2019-12-26, 02:32 PM

May this be a general thread for discussing HDR from a technical & workflow perspective, for those interested in encoding and creating HDR content.

I'll start out with something I wanna share: a little bit of information regarding nits and the HDR curve, based on my own experiments. I also wanna preface this by saying I don't have an HDR display, so these are mostly considerations from a technical perspective.

Why these thoughts are useful I will explain at the bottom of the post.

Here's my experiment:

Here's what I did:

1. Created a 32bit floating point image in Photoshop, with a custom linear sRGB colorspace (gamma set to 1).

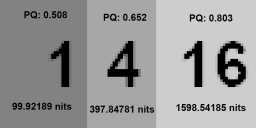

2. Created 3 white rectangles. The darkest one had intensity set to 1. That means that on a normal display it reaches peak brightness. Then I created another one with an intensity of 4, equivalent to a 2 stop overexposure. I could do this thanks to the 32bit floating point space in Photoshop. And then a third one with an intensity of 16, equivalent to a 4 stop overexposure. On my display they all simply looked equally white.

3. Saved this image as an OpenEXR file (supports overbright values >1).

4. Opened this OpenEXR file in After Effects and told AE to interpret it as linear sRGB, like it was saved. Set the After Effects project setting to 32 bit floating point with, again, linear sRGB color space. The linear color space is important to arrive at the correct values; gamma only complicates things. In linear color space, 2 simply means twice as much light as 1, 4 twice as much as 2, and so on.

5. Exported as 16 bit TIFF, but made sure to set a Rec2020 PQ HDR ICC Profile for the export. This makes AE automatically convert the superbright (>1) values into the HDR PQ color space. Strictly speaking PQ (SMPTE ST 2084) is the transfer curve and Rec2020 the gamut; it's the same combination that most (if not all) UHD HDR Blu-rays use.

6. Opened the resulting TIFF in Photoshop and interpreted (not converted!) the colors as normal sRGB. This was just done for convenience, so that the image you see above has visible shades. Otherwise Photoshop automatically *converts* into sRGB and all the shades end up pure white again, which isn't very useful for this demonstration: I wanna see that it actually worked and that I ended up with different shades, or in other words, that the superbright detail was actually preserved.

7. Now I hovered the mouse over the different shades while having the mode in the Info window set to 32 bit floating point. Thus I got the values you can see written on top as "PQ: ". Those are values between 0 and 1, representing the actual RGB brightness in the PQ-HDR-encoded image (or video). Of course that 0-1 would be scaled up to, for example, 0-1023 for 10 bit HDR video (or 64-940 in limited range); it's merely a different way of displaying the data. I chose the 0-1 representation mainly because of the next step:

8. Fed those PQ values into this script:

Link: https://github.com/colour-science/colour...st_2084.py

This script is part of the colour-science package in Python. It basically converts the raw PQ signal value to nits. This is possible because with PQ HDR (the de facto standard these days) every value in the encoded video corresponds precisely with an intensity in nits. You can see in the screenshot how to call the script, if you want to test it out yourself. I did this with Anaconda in the Anaconda prompt. Details on how to get it working are at the bottom of the post.

9. I noted the resulting nit brightness at the bottom of my little graphic at the top of this post.

10. As you can see, there's a clear pattern: A fully bright pixel (intensity of 1) in a 32 bit floating point composition in After Effects corresponds with pretty much exactly 100 nits (probably some rounding errors somewhere). A pixel with an intensity of 4 corresponds with pretty much exactly 400 nits, and the pixel with an intensity of 16 has a brightness of 1600 nits.
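The pattern from step 10 can also be checked without After Effects. Below is a minimal sketch of the PQ curve (SMPTE ST 2084) in plain Python, using the constants published in the standard. The function names `nits_to_pq` and `pq_to_nits` and the `SDR_WHITE_NITS = 100` scaling are my own assumptions for illustration; the 100 nits figure is simply what the experiment above suggests AE maps an intensity of 1 to.

```python
# PQ (SMPTE ST 2084) constants as published in the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # a PQ signal of 1.0 is defined as 10000 nits

# Assumption taken from the experiment: linear intensity 1.0 -> 100 nits.
SDR_WHITE_NITS = 100.0


def nits_to_pq(nits):
    """Encode absolute luminance in nits to a PQ signal in the range 0-1."""
    y = max(nits, 0.0) / PEAK_NITS
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1 + C3 * y_m1)) ** M2


def pq_to_nits(signal):
    """Decode a PQ signal (0-1) back to absolute luminance in nits."""
    n = signal ** (1 / M2)
    return PEAK_NITS * (max(n - C1, 0.0) / (C2 - C3 * n)) ** (1 / M1)


if __name__ == "__main__":
    # The three rectangles from the experiment: intensity 1, 4 and 16.
    for intensity in (1.0, 4.0, 16.0):
        nits = intensity * SDR_WHITE_NITS
        signal = nits_to_pq(nits)
        print(f"intensity {intensity:>4}: PQ {signal:.4f} -> {pq_to_nits(signal):.1f} nits")
```

The encode and decode functions are exact inverses of each other, so a round trip should land back on 100, 400 and 1600 nits. A PQ signal of 0.5 comes out at a bit over 90 nits, which shows how much of the signal range PQ spends on the darker end.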

Why is this useful?

Simple. Let's say you have some linear footage like, for instance ... a film scan. Typically this scan will be rather dark due to headroom necessary to avoid clipped highlights. In a normal SDR grade you would use some form of curves to compress the highlights without blowing them out, for instance via Gamma, or by hand, in order to get more detail in the shadows.

In an HDR grade, you can do this instead: Make sure you're in a linear color space and 32 bit floating point in After Effects. Apply the "Exposure" filter. Set Exposure to a value that gives you enough detail in the shadows. You will now have blown out highlights, but don't worry, it's all part of the plan. Next, export the video while setting a Rec2020 PQ color profile for the export. After Effects will automatically convert the blown out highlights (which aren't really blown out) into the correct HDR values. You don't even need an HDR display to do this. Great!
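To see why the highlights "aren't really blown out", here is a rough sketch of what an exposure boost does to linear floating point values. The sample pixel values and the helper name `apply_exposure` are made up for illustration, and this is only the underlying arithmetic, not After Effects' actual implementation.

```python
def apply_exposure(linear_values, stops):
    """Scale linear light values by 2**stops (one stop doubles the light)."""
    gain = 2.0 ** stops
    return [v * gain for v in linear_values]


# Hypothetical linear pixel values from a dark scan: shadows up to highlights.
scan = [0.01, 0.05, 0.25, 0.6, 1.0]

boosted = apply_exposure(scan, stops=2)

# A pipeline that clamps at 1.0 would flatten the two brightest pixels ...
clipped = [min(v, 1.0) for v in boosted]

# ... while 32 bit float keeps the overbright values for the PQ export.
print("float  :", boosted)
print("clipped:", clipped)
```

In the float list the two brightest pixels stay distinct (roughly 2.4 and 4), whereas the clipped list turns both into 1. That difference is the detail the PQ export gets to keep.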

And here's where all this information comes in super handy too: In order to make a proper HDR encode that is compatible and works, you need to supply metadata. Most importantly, the peak brightness. This value doesn't affect the image per se, rather it gives TVs a hint on how to remap superbright values if they cannot reach the brightness of the content. Normally you would just have to guess some random value. If you guess too low, you may end up with clipped highlights, because the TV doesn't take them into account when tonemapping. If you guess too high, your image will end up too dark after tonemapping because the TV tries to take into account a very high peak brightness that is never actually reached.
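If you'd rather measure the peak than guess it, the idea can be sketched like this: find the brightest PQ code value in the encode and decode it back to nits. This is only an illustration with made-up sample values. It assumes full-range 10 bit codes (0-1023), and the names `pq_code_to_nits` and `peak_nits` are hypothetical. As far as I know, the HDR10 field usually used for this is MaxCLL, which real tools compute over every pixel of every frame.

```python
# PQ (SMPTE ST 2084) decoding constants as published in the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_code_to_nits(code, bit_depth=10):
    """Decode a full-range integer PQ code value to nits."""
    signal = code / (2 ** bit_depth - 1)
    n = signal ** (1 / M2)
    return 10000.0 * (max(n - C1, 0.0) / (C2 - C3 * n)) ** (1 / M1)


def peak_nits(frames):
    """Brightest channel value found in any pixel of any frame, in nits."""
    brightest_code = max(max(pixel) for frame in frames for pixel in frame)
    return pq_code_to_nits(brightest_code)


# Hypothetical frames, each a list of (R, G, B) 10 bit PQ code values.
frames = [
    [(120, 130, 110), (520, 515, 500)],
    [(668, 660, 640), (300, 310, 305)],
]
print(f"peak: {peak_nits(frames):.0f} nits")
```

The brightest code in this sample is 668, which decodes to roughly 400 nits, so that is about the value you would put into the metadata for this (tiny) clip.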

With the information we have though, we can give it the correct value. Let's say you have your linear footage in your composition without any adjustments. Now you know that the brightest possible pixel in the footage will be an intensity of 1. We also know that 1 means 100 nits. Now, if you raise Exposure by, say, 2 stops, via the Exposure effect, you get an intensity of 4 (one stop means the amount of light doubles). And we also know that an intensity of 4 equals 400 nits.

Whatever Exposure adjustment you set under the described circumstances, you can simply take 2 to the power of the number of stops and multiply that by 100 to get the theoretical peak brightness in nits.

So for instance:

Exposure +1 stop = 200 nits peak

Exposure +2 stops = 400 nits peak

Exposure +3 stops = 800 nits peak

Exposure +4 stops = 1600 nits peak
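The list above follows from one small formula: peak nits = 100 × 2^stops. Here's a sketch of it in both directions, assuming (as in the experiment) that an unadjusted intensity of 1 lands at 100 nits. The function names are hypothetical.

```python
import math

SDR_WHITE_NITS = 100.0  # assumption: linear intensity 1.0 maps to 100 nits


def peak_nits_for_stops(stops):
    """Theoretical peak brightness after raising Exposure by `stops`."""
    return SDR_WHITE_NITS * 2.0 ** stops


def stops_for_peak_nits(nits):
    """Inverse: how many stops of Exposure reach a given peak brightness."""
    return math.log2(nits / SDR_WHITE_NITS)


for stops in (1, 2, 3, 4):
    print(f"Exposure +{stops} stops = {peak_nits_for_stops(stops):.0f} nits peak")
```

The inverse is handy if you start from a target: `stops_for_peak_nits(1000)` comes out at about 3.3 stops, and fractional Exposure values work the same way.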

With the film scans I tested so far, typically a value between 2 and 3 stops seems good to me, so you get a peak brightness somewhere between 400 and 800 nits.

Which coincidentally is in the range that the new Star Wars HDR remasters reach. Many people said it wasn't "true HDR", but I disagree based on the film scans I played around with, it's a pretty believable range for 35mm content. Granted, a scan from the negative might reveal more range in theory, but you wouldn't get that kind of range in the cinema on 35mm, so it's pretty "faithful" in that aspect imo.

How can I do that fancy Python script stuff myself?

I say fancy because I'm a complete Python noob myself, but anyway, here's how I did it:

1. Install Anaconda (it's a kind of Python distribution or something)

2. Run Anaconda Prompt

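3. Do this:

Code:

pip install colour-science

4. Run python simply by entering:

Code:

python

5. Do this:

Code:

import colour

6. and this:

Code:

colour.models.eotf_ST2084(0.5)

Replace 0.5 with any PQ intensity (normalized to a range between 0 and 1).

7. Receive the corresponding nit intensity as a result.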

Hope this can be useful to someone someday.

I'm no expert or anything, but thought I'd share the little I figured out to demystify HDR a little.
